Decoding Silicon Evolution at Apple: How Rosetta, M-Series SoCs Influence Choices in Computer Shopping | Tech Insights by ZDNet

Apple Silicon Series Explained: Understanding Rosetta, M1 to M3 Impact on Choosing a Computer

Apple Silicon, SoC, Rosetta 2, M1, M2, M3, Pro, Max, and Ultra… If you’re interested in Macs, you’ve probably heard one or more of these terms. But unless you’ve been closely following Apple’s technical progress, it might not be clear what they all mean and why they matter to your future buying decisions.

In this article, I answer a relatively common reader question: What does it all mean?

Understanding the CPU

A computer – whether Mac, PC, Linux machine, Raspberry Pi, or even the embedded brain in your microwave – consists of a set of components. Some manage input, getting data into the machine. Some manage output, presenting that information to you or doing a task (such as popping popcorn or displaying lifelike pictures in your video game). Some components store information, either temporarily or permanently. Some manage connections to one or more networks (Ethernet, Bluetooth, Wi-Fi).

The processor – the central processing unit (or CPU) – orchestrates all of these elements. The CPU processes sequences of instructions, performs calculations, makes decisions, and tells data to move. In practice, modern computers have many processors, some of which handle special-purpose calculations (like your GPU for graphics). But at the center of it all is the CPU.

Generally speaking, the faster the CPU, the faster the machine. That’s a wild over-simplification because even though a super-fast CPU might process instructions and calculations at warp speed, if it takes the system bus forever to move data around, it doesn’t matter. It’s like driving a Koenigsegg Agera RS in rush hour. The car may be one of the world’s fastest cars, but if the road is bottlenecked, it’s not going anywhere.

In reality, balance is important. If you’ve ever built a PC, you probably know that it doesn’t make sense to pair a high-end GPU with a mediocre CPU because the CPU will bog down the graphics. It doesn’t make sense to pair a super-fast CPU with generic, middle-of-the-road memory because the memory bus won’t be able to handle what the CPU throws at it.
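One rough way to picture the balance problem is to treat the machine as a chain of stages, where the slowest stage sets the pace for everything else. The sketch below is a deliberately simplified model, not a benchmark: the function name `effective_throughput` and all of the numbers are made up for illustration.

```python
def effective_throughput(stage_rates):
    """Return (name, rate) of the slowest stage in a serial pipeline.

    stage_rates maps a stage name to the units of work per second that
    stage can sustain. In this simplified model the slowest stage caps
    the whole system, no matter how fast the other stages are.
    """
    if not stage_rates:
        raise ValueError("need at least one stage")
    bottleneck = min(stage_rates, key=stage_rates.get)
    return bottleneck, stage_rates[bottleneck]


# Hypothetical numbers, chosen only to illustrate the point.
unbalanced = {"cpu": 1000, "memory_bus": 200, "gpu": 800}
balanced = {"cpu": 400, "memory_bus": 400, "gpu": 400}

print(effective_throughput(unbalanced))  # ('memory_bus', 200)
print(effective_throughput(balanced))    # ('cpu', 400)
```

In this toy model, the "balanced" machine does twice the work of the "unbalanced" one even though its CPU is far slower, which is the Koenigsegg-in-rush-hour effect in numbers.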

This balance issue is, in fact, part of what has made Apple devices so successful. Because Apple (with a few limited exceptions) has always controlled the entire component mix of the machine, the company has been able to balance performance well. For fast machines, that means higher-end components. For slower machines, that means saving money on parts that would not be fully utilized.

In almost all but the simplest machines, each CPU consists of one or more cores. The cores are the actual processing units. A CPU with multiple cores also has a traffic management component that directs the flow of instructions to each core.

Multiple cores can increase performance considerably for problems that can be split into parallel processes. Many modern processes work well in parallel, especially graphics, data crunching, AI, ML, and AR-related tasks.
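How much extra cores help depends on how much of the job can actually run in parallel. A standard way to estimate this is Amdahl's law, which the article doesn't name but which captures the same idea. The sketch below assumes a hypothetical task that is 90% parallelizable; that figure is an illustration, not a measurement of any real app.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Estimate the speedup from running a task on several cores.

    parallel_fraction is the share of the work (0.0 to 1.0) that can be
    split across cores; the rest must still run on a single core.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Assumed workload: 90% of the job can be parallelized.
for cores in (1, 4, 8, 16):
    print(cores, "cores:", round(amdahl_speedup(0.9, cores), 2), "x")
```

The returns diminish as cores are added, because the serial part of the job never gets faster. That is why highly parallel work like graphics benefits most from high core counts.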

CPU vs. SoC

OK, to review: A computer consists of many components, most of which are integrated circuits (also called chips). Many computers also have many processors. So far, I’ve mentioned the CPU (the central processing unit) and the GPU (the graphics processor).

Some Mac models include additional, special-purpose processors like the Neural Engine (for AI and machine learning) and a media engine (for non-game video encoding and decoding).

For many years, most powerful computers consisted of all the various system chips located as separate packages, soldered onto a motherboard. Some of the components, like memory and add-on boards, could be inserted via connectors on the board. This allowed for scalability and flexibility, but there are heat and propagation speed challenges with separate discrete packages soldered to a board. Many people who built their own PCs are familiar with this architecture.

The SoC (or system on a chip) architecture is different. Although the earliest SoC implementations were made for LED watches in the 1970s, they didn’t have processors in them. Modern SoCs can instead be traced back to the 1990s, when chip-making machines had evolved to where they could create components small enough to hold a whole system on one die. (A die is the actual semi-conductive material that does the work. What most people think of as a chip is technically a package that holds a die – sometimes more than one – and the wires to connect the die to the rest of the system. The chip is, in fact, the die inside.)

Unlike the motherboard/CPU/memory model common inside most PCs, iPhones and the latest Macs are based on SoCs. These are chips that contain not only the processor (or processors) but also memory, controllers for storage and I/O, and more – all inside a single package.

SoCs have enormous performance benefits because all the electrical impulses have to travel far shorter distances. Because the distance is shorter, the current used can be considerably less. And because the current is lower, there’s less power used. Less power used means less heat and longer battery life.

It wasn’t until SoCs could be successfully fabricated with tens of billions of transistors that they were suitable for high-performance personal computers. They can be now, so now they are.

Apple Silicon

You’ve heard that Apple has stopped building Intel-based Macs and instead is building them based on something called Apple Silicon. Chips are made out of silicon (number 14 on the Periodic Table, symbol Si). So Apple Silicon is silicon chips from Apple. But what does that really mean?

Intel’s CPUs are often called x86 chips because they’re derived from the 8086 architecture and instruction set. The 8086 and 8088 processors date back to the late 1970s, and the very first PCs were built around them. Since then, there have been numerous generations (both by Intel and AMD), up to Intel’s Meteor Lake in 2023.

Apple’s Mac computer line has jumped chip families three separate times. The original Mac 128 introduced in 1984 used a 68000 processor. Apple stayed with the 68K family for 10 years, then jumped to the PowerPC processor in 1994. Apple stayed with PowerPC-based Macs for 12 years, then jumped to PC standard Intel chips in 2006. This was the dawn of the Hackintosh era because most Macs were generally compatible with standard PC architecture components.

Then, in 2020, Apple jumped to Apple Silicon, a chip architecture based on ARM, the processor type used in most smartphones, including iPhones. In fact, the A-series chips, like the A17 Pro used in iPhone 15 Pros, use a chip architecture similar to that of the Apple Silicon M1s and M2s in current-model Macs and iPad Pros.

For almost all activities, the Apple Silicon-based Macs blow away their equivalent Intel-based Macs in terms of performance, battery life, and heat. Apple Silicon-based Macs are an unqualified win for both Apple and its customers.

They’re a win for Apple because the company no longer has to rely on Intel for its chips. To be fair, Apple still doesn’t make its own chips. It also doesn’t manufacture its own iPhones. The company relies on a vast supply chain to produce in the quantities it sells. But the company now designs its own chips for the Mac. By removing its reliance on Intel’s innovation teams, Apple can engineer these components to its specific priorities. The result is apparent.

However, the Apple Silicon transition was a win for Apple and its customers in large part because of something called Rosetta 2.

Rosetta 2

Intel x86 and Apple Silicon ARM are two vastly different architectures. That means they process data very differently, and they use different instructions to do so.

All major applications are built using computer code. Programmers write code in a higher-level language – basically, a language that humans can read, debug, and maintain. But to a processor, that human-readable language is highly inefficient, so the code is converted into machine code.

Machine code lacks the niceties of higher-level languages that make it readable and maintainable by humans. Still, processor chips understand it and can execute it far faster than human-readable code. When you install a program on your computer, you’re usually installing compiled code – code that’s been translated to be read by the processor, not by you or a programmer.

Until 2020, most Mac apps were distributed with code compiled for Intel processors. If that code was handed to an Apple Silicon-based Mac, the M1, M2, or M3 processor wouldn’t be able to do anything with it. The computer would respond with the machine’s version of “Eh?”

The difference in architecture is profound. The languages and even the structure of the languages understood by Intel processors and Apple Silicon processors are wildly different. This was also the case going from 68000 to PowerPC and from PowerPC to Intel.

In other words, Apple engineers have solved the “processors-don’t-speak-each-others’-languages” problem a number of times before. They’ve solved this by using a combination of translation and emulation.

With new Apple Silicon machines, this is done by Rosetta 2. When you try to open an Intel binary on an M-based machine, MacOS hands it off to a program called Rosetta 2. Rosetta 2 does an on-the-fly translation of the x86 code into Apple Silicon code, saves it, and then runs the translated code. Some elements are emulated while other elements are completely transcoded.

The first time you run an Intel-based program, it may take a little while for the program to start running. That’s because Rosetta is doing a translation pass first. Subsequent runs will then be faster because the translation has already been done.
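The translate-once, reuse-afterward pattern described above can be sketched in a few lines. This is a toy model only: `TranslationCache` and `fake_translate` are hypothetical names, the "translation" just tags the bytes, and nothing here reflects how Rosetta 2 is actually implemented inside macOS.

```python
import hashlib


class TranslationCache:
    """Toy model of translating a binary once and reusing the result."""

    def __init__(self, translate):
        self._translate = translate   # function that does the slow work
        self._store = {}              # fingerprint -> translated bytes
        self.translations_done = 0    # how many slow passes have run

    def load(self, binary):
        # Identify the binary by a hash of its contents.
        key = hashlib.sha256(binary).hexdigest()
        if key not in self._store:
            # First run: pay the translation cost and save the result.
            self._store[key] = self._translate(binary)
            self.translations_done += 1
        # Later runs: return the saved translation immediately.
        return self._store[key]


def fake_translate(binary):
    # Stand-in for real translation; it only labels the bytes.
    return b"arm64:" + binary


cache = TranslationCache(fake_translate)
first = cache.load(b"x86-program")    # slow path, translation happens
second = cache.load(b"x86-program")   # fast path, served from the cache
print(cache.translations_done)        # 1
```

The second launch skips the expensive step entirely, which matches the behavior you see in practice: a pause the first time an Intel app opens, and normal startup after that.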

The first time you run an Intel program on an M-based Mac, MacOS may ask you if you’d like to install Rosetta 2. This is a definite yes because it opens the door to all those Intel apps that you might already have. MacOS will pull down the Rosetta 2 code from Apple, install it on your computer, and then be able to run Intel-based applications. (Historical note: Rosetta was originally used to translate from PowerPC to Intel more than a decade ago. That’s why we’re at Rosetta 2.)

After being translated with Rosetta, some Intel-based applications will actually run faster on Apple Silicon than they used to run on your Intel-based Mac.

Let’s talk about developers for a moment. Developers code using higher-level languages, but they have to compile their projects for each architecture. Since 2006, most developers have been compiling for Intel-based machines. When Apple introduced the M1, the company made the process of compiling that same code to Apple Silicon relatively easy. But it’s still a non-trivial investment in developer time to create the new version.

While most major developers have made the jump, others (either because it’s too big an investment, they have other priorities, or they don’t see a good business reason) are still shipping Intel-only apps. Some applications that users rely on are older, not maintained, and will never be updated for Apple Silicon.

That’s why the Rosetta 2 translation/emulation capability was so critical to consumer acceptance of the new Apple Silicon architecture. When the M1 first came out, developers were understandably skeptical of how well it would do compared to Intel. They reasoned that if Intel apps wouldn’t run – or ran very poorly – users (who are pretty reliant on the software they are used to) wouldn’t buy the new Apple Silicon-based machines.

But now that Apple Silicon has been out for over three years, more developers are investing the time to create Apple Silicon-native apps.

It’s a win for both developers and users when code is compiled for Apple Silicon. In general, native Apple Silicon programs run faster than Rosetta-translated ones. So if a developer recompiles their apps for Apple Silicon, they get an almost automatic performance boost.

I found, for example, that Rosetta-translated Chrome was OK but a bit sluggish. When I replaced that with the Apple Silicon version of Chrome, it was far faster. For help finding out which of your applications are native, Intel, or “Universal” (meaning the application contains native code for both Intel and Apple Silicon), read “Sluggish apps on your M1 Mac? Check this first for a possible fix.”
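If you're curious how a tool can tell Intel, native, and Universal apps apart, the answer is in the first few bytes of the app's executable, which use Apple's Mach-O file format. The sketch below reads those header bytes and reports which architectures are present. The function names `architectures` and `classify` are my own, and the code only handles the common 64-bit cases, so treat it as an illustration rather than a replacement for the built-in `file` or `lipo -archs` commands.

```python
import struct

# CPU type codes used in Mach-O headers.
CPU_TYPE_X86_64 = 0x01000007
CPU_TYPE_ARM64 = 0x0100000C
CPU_NAMES = {CPU_TYPE_X86_64: "x86_64", CPU_TYPE_ARM64: "arm64"}

FAT_MAGIC = 0xCAFEBABE    # universal ("fat") header, stored big-endian
MH_MAGIC_64 = 0xFEEDFACF  # single-architecture 64-bit header


def architectures(header):
    """List the CPU architectures named in the start of a Mach-O file."""
    if len(header) < 8:
        raise ValueError("header too short")
    (magic,) = struct.unpack(">I", header[:4])
    if magic == FAT_MAGIC:
        (count,) = struct.unpack(">I", header[4:8])
        if len(header) < 8 + count * 20:
            raise ValueError("truncated or not a universal binary")
        names = []
        for i in range(count):
            start = 8 + i * 20  # each entry is five 32-bit fields
            (cputype,) = struct.unpack(">I", header[start:start + 4])
            names.append(CPU_NAMES.get(cputype, hex(cputype)))
        return names
    (magic_le,) = struct.unpack("<I", header[:4])
    if magic_le == MH_MAGIC_64:
        (cputype,) = struct.unpack("<I", header[4:8])
        return [CPU_NAMES.get(cputype, hex(cputype))]
    raise ValueError("not a recognised Mach-O header")


def classify(archs):
    """Turn a list of architecture names into a friendly label."""
    has_intel = "x86_64" in archs
    has_arm = "arm64" in archs
    if has_intel and has_arm:
        return "Universal"
    if has_arm:
        return "Apple Silicon"
    if has_intel:
        return "Intel"
    return "Other"
```

To try it on a real app, you would read the first few kilobytes of the executable inside the app bundle (typically under `Contents/MacOS/`) in binary mode and pass them to `architectures`. One caveat: Java class files start with the same `0xCAFEBABE` bytes, so this check is only meaningful for files you already know are Mac executables.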

M1, M2, M3, Pro, Max, and Ultra

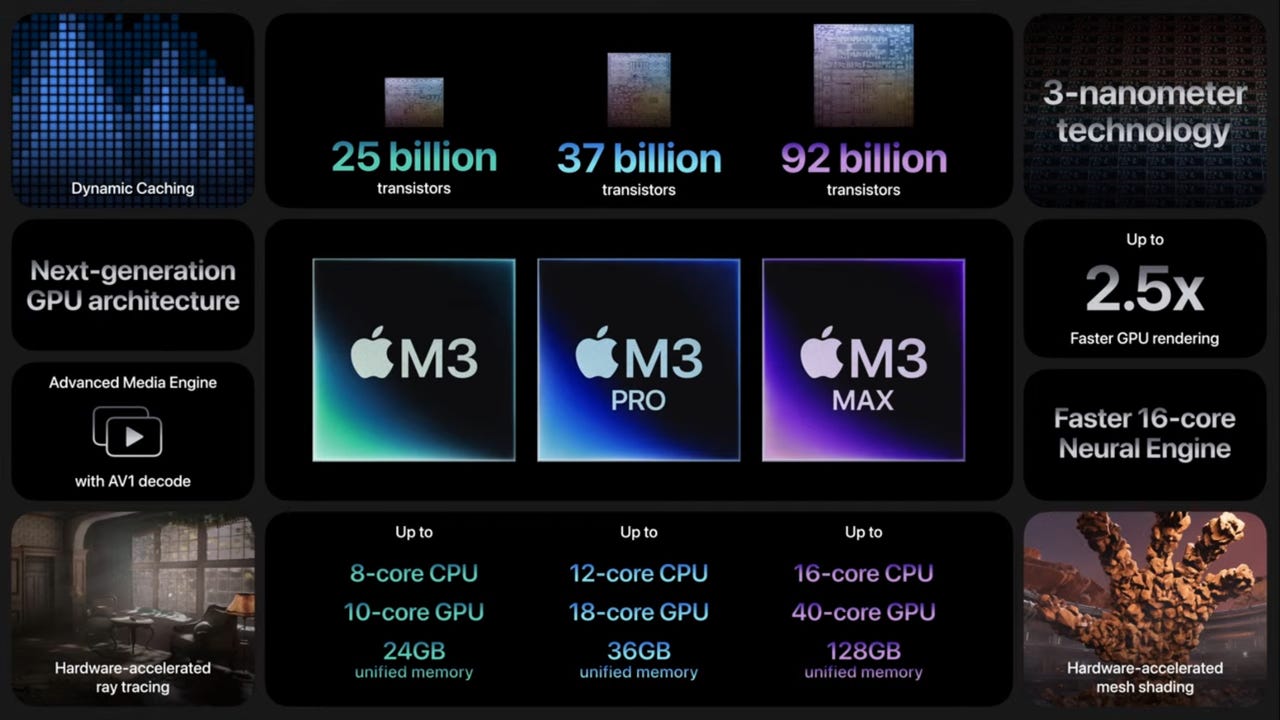

M1 was the first Apple Silicon processor model that Apple used inside its computers. As of this writing, Apple has shipped an M2 generation of processors, and just announced the M3 generation. In addition to the base-model processors, Apple has created higher performance versions, which are labeled Pro, Max, and – for the M1 and M2 – Ultra. We haven’t seen the Ultra on the M3 family, at least not yet.

The M1 is the original Apple Silicon processor for Macs, announced in 2020. The M1 Pro and Max were announced in the fall of 2021. All M1 processors have four different types of cores: performance cores (power computing), efficiency cores (slower, for more pedestrian work, but take less power), GPU (for graphics performance), and Neural Engine (for AI and machine learning).

The M1 Ultra, which is essentially two M1 Max processors fused together, was launched in March 2022. The M2 was introduced in June 2022, with its Pro and Max siblings launched in January 2023. Apple launched the M2 Ultra five months later, in June 2023. Then, in October 2023, Apple announced not only the M3 but the M3 Pro and M3 Max processors as well.

The difference between the models is in how many cores each has. The base M1, M2, and M3 processors each have four performance cores and four efficiency cores. The M1 Pro and M1 Max have only two efficiency cores, but either six or eight performance cores (depending on how much you want to spend). The M2 Pro and Max double the efficiency cores to four, but top out at eight performance cores. And the M3 Pro and M3 Max change up the core calculus, with the M3 Pro sporting six each of performance and efficiency cores, while the M3 Max goes for four efficiency cores and a max of 12 performance cores.

All of the processors, in each of their performance grades, have 16 neural cores, except the M1 and M2 Ultra, which have 32. The biggest difference is in GPU cores. The base M1 has seven or eight. The M1 Pro has 14 or 16. And the M1 Max has 24 or 32. The M1 Ultra has 64 GPU cores while the M2 Ultra can jump that up to 76 cores. Adding it up across all core types, the max M2 Ultra comes with 132 cores!

The M2, M2 Pro, and M2 Max sport up to 10, 19, and 38 GPU cores, respectively. The M3 family changes things up slightly, with the M3, M3 Pro, and M3 Max rocking up to 10, 18, and 40 GPU cores each. Here’s a handy table that compares everything:

[Table image: M-series core counts compared. Credit: David Gewirtz/ZDNET]
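The "adding it up" arithmetic behind that 132-core figure can be checked with a short script. The core counts below are the top configurations quoted in this article. The one assumption I'm making is that the M2 Ultra's CPU cores are simply double the M2 Max's, in line with the Ultra being two Max chips fused together. The `Chip` class is just a convenience for this sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chip:
    name: str
    performance: int  # performance CPU cores
    efficiency: int   # efficiency CPU cores
    gpu: int          # GPU cores
    neural: int       # Neural Engine cores

    @property
    def total(self):
        # Sum across every core type, as the article does.
        return self.performance + self.efficiency + self.gpu + self.neural

    def doubled(self, name):
        # Model an Ultra as two Max dies joined together (an assumption).
        return Chip(name, self.performance * 2, self.efficiency * 2,
                    self.gpu * 2, self.neural * 2)


# Top-end configurations as quoted in the article.
m2_max = Chip("M2 Max", performance=8, efficiency=4, gpu=38, neural=16)
m3_max = Chip("M3 Max", performance=12, efficiency=4, gpu=40, neural=16)
m2_ultra = m2_max.doubled("M2 Ultra")

for chip in (m2_max, m2_ultra, m3_max):
    print(f"{chip.name:9} P={chip.performance:2} E={chip.efficiency} "
          f"GPU={chip.gpu:2} NE={chip.neural:2} total={chip.total}")
```

Doubling the M2 Max gives 16 performance, 8 efficiency, 76 GPU, and 32 neural cores, which adds up to the 132 quoted above.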

The M-series processors are SoCs with memory built into the chip package, and storage is likewise built into the machine, so you choose both at purchase. Depending on the chip, you can go from 8GB RAM up to 192GB RAM, and from 256GB of flash storage up to a whopping 8TB on the 16-inch MacBook Pro with M3 Max. That additional storage will cost you, to the tune of a few thousand bucks more. But if you need it, you need it.

The sizes of the chips differ considerably. The base chip is about a quarter the size of the Max model. This makes sense because the Max crams a lot more onto its die. The transistors themselves got smaller between the M2 and M3 generations, when the die-production process went from 5-nanometer to 3-nanometer. But for now, it’s just interesting to note that the M3 Max has 92 billion transistors, while the double-chip M2 Ultra has 134 billion transistors – all in the space of about the size of a fingernail.

Final thoughts

M1, M2, and M3 are all Apple Silicon. Each just has a little more oomph than the last. When you look at purchasing your next Mac, you’ll want to do a typical price/performance analysis to decide which of those SoC chips meets your needs and your budget.

Now that you know more about what’s going on under the hood, are you planning to move to Apple Silicon? Have you already done so? If so, what have your experiences been with translating Intel apps to Apple Silicon with Rosetta 2? Did you even know that was happening? Do you have your eye on a sweet M3 Max? Have you been running M1 or M2-based machines? Let us know in the comments below.

- Title: Decoding Silicon Evolution at Apple: How Rosetta, M-Series SoCs Influence Choices in Computer Shopping | Tech Insights by ZDNet

- Author: Andrew

- Created at : 2024-10-06 17:40:01

- Updated at : 2024-10-07 18:04:54

- Link: https://tech-renaissance.techidaily.com/decoding-silicon-evolution-at-apple-how-rosetta-m-series-socs-influence-choices-in-computer-shopping-tech-insights-by-zdnet/

- License: This work is licensed under CC BY-NC-SA 4.0.